Mastering OpenSearch at Scale: A Practical Guide for Enterprise Search Solutions

When queries take too long, they block real-time business decisions. We share architecture and optimization patterns drawn from real warehouse management systems processing millions of records.

Fast and effective search and analytics capabilities are critical to how businesses operate and make decisions. OpenSearch provides the scalability needed for searching millions of records while maintaining fast response times. We've applied it at scale in Ruggable's warehouse system, which tracks thousands of products globally. This guide walks through the indexing strategies and query optimizations we use, with practical examples from e-commerce and warehouse systems tested in production.

In this article, we cover:

- Why OpenSearch?

- Real-World Applications

- Core Architecture

- Hands-on Example: Building a Bookstore Search

- Query Types: A Practical Guide

- 1. Match Query

- 2. Match Phrase Query

- 3. Multi-Match Query

- 4. Term Query

- Understanding Search Relevance

- Query vs. Filter Context

- Advanced Implementation: Complex Queries

- Performance Optimization: Lessons from Production

- Conclusion

Why OpenSearch?

OpenSearch shines when your system needs to search through millions of records, like server logs, product catalogs, or customer data, and return results in milliseconds. At its core, OpenSearch is a distributed search and analytics engine designed to handle large-scale data with remarkable efficiency.

If you've been in the search space before, you might wonder: "Why OpenSearch and not Elasticsearch?" This stems from recent changes in Elasticsearch's licensing model. Elasticsearch was once the go-to tool for open-source data search. That changed when Elastic updated its licensing, moving away from open-source principles. In response, AWS forked the last open-source version of Elasticsearch and birthed OpenSearch, a fully open-source project. While the two tools started from the same foundation, they've since evolved independently, with distinct features and roadmaps.

When evaluating both options for our client implementations, we chose OpenSearch for these key reasons:

- Deep AWS Integration: Native AWS service integration reduces deployment complexity.

- True Open Source: Apache 2.0 license ensures long-term availability and community access.

- Community Innovation: Active contribution framework for custom feature development.

- Enterprise-Grade Support: AWS backing while maintaining open-source flexibility.

- Cost Optimization: Predictable AWS pricing with built-in infrastructure synergies.

Beyond its licensing and community, OpenSearch offers deployment flexibility. You can opt for a managed service in the cloud, like Amazon OpenSearch Service, for a hassle-free experience where AWS handles the operational heavy lifting. Alternatively, an on-premises deployment provides greater control over your data and infrastructure, which might be necessary for organizations with specific compliance needs. The choice depends on factors like budget, technical expertise, and the level of control required.

Real-World Applications

At Whitespectre, we've leveraged OpenSearch to build large-scale, high-performance search solutions for our clients, including a comprehensive warehouse management system for Ruggable, a leading home goods manufacturer. This system manages inventories across multiple global warehouses, handling complex searches across materials, orders, and locations, resulting in improved operational efficiency and reduced order fulfillment time. While we'll start with a straightforward example to establish core concepts, we'll build up to the complex querying patterns we use in production systems like these.

Common applications include:

- Data Exploration and Visualization: Build interactive dashboards analyzing structured data (sales records) and unstructured data (customer reviews) with sub-second response times.

- E-Commerce Search: Enable product discovery through typo-tolerant search, synonym matching, and relevance tuning, directly impacting conversion rates.

- Log Analytics: Centralize and search millions of log entries in real-time, with automated alerting on error patterns and performance anomalies.

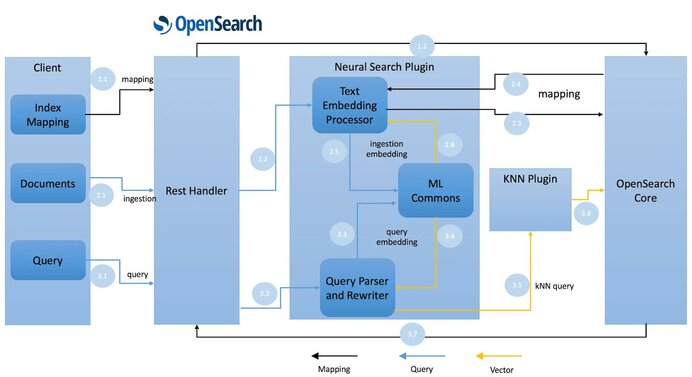

Core Architecture

OpenSearch's architecture is straightforward from an end user's perspective, even though it runs a complex distributed system internally. The key concept to understand is the index, which acts as OpenSearch's equivalent of a database. An index stores documents as JSON, while shards, nodes, clusters, and inverted indices work behind the scenes to keep searches fast and reliable. Each index is split into shards that are distributed across the nodes of a cluster, and replica copies of those shards provide built-in redundancy.
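To make these pieces concrete, here is a minimal Python sketch that builds the request body for creating an index. The index name `books`, the field names, and the shard and replica counts are illustrative assumptions, not the tutorial's actual configuration. The settings show where shards and replicas are declared; the mappings decide which fields are analyzed into the inverted index (`text`) and which are stored as exact values (`keyword`).

```python
import json


def build_index_body(shards: int = 1, replicas: int = 1) -> dict:
    """Build a create-index body for a hypothetical `books` index."""
    return {
        "settings": {
            # Primary shards the index is split into, spread across nodes
            "number_of_shards": shards,
            # Copies of each primary shard, placed on other nodes for redundancy
            "number_of_replicas": replicas,
        },
        "mappings": {
            "properties": {
                # Full-text fields: analyzed into terms in the inverted index
                "title": {"type": "text"},
                "author": {"type": "text"},
                # Exact-value fields: stored as-is for term queries and filters
                "isbn": {"type": "keyword"},
                "store_id": {"type": "keyword"},
                "stock": {"type": "integer"},
            }
        },
    }


print(json.dumps(build_index_body(), indent=2))
```

You could paste the printed JSON under `PUT books` in the Dev Tools console. If you skip this step, OpenSearch infers a mapping from the first documents you index (dynamic mapping).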

Hands-on Example: Building a Bookstore Search

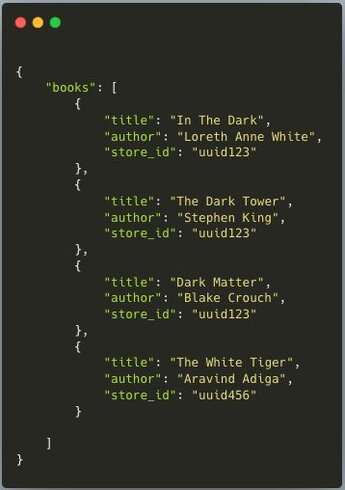

We'll build a multi-store bookstore search system, starting with basic inventory management and adding store-specific search capabilities. Our initial dataset contains four books, stored in OpenSearch's JSON-like format, which we'll load once the domain is set up.

Setup

Setting up OpenSearch doesn't require much boilerplate. AWS provides templates that are easy to follow and give you an out-of-the-box configuration that works for most use cases. You can, of course, tweak any of it: node availability, access control through IAM, instance types, and so on.

For our tutorial, we can run AWS's example setup, which uses simplified authentication with a master user password.

Side note: Make sure to select the t2.small.search instance type when creating your domain. That instance is covered by the AWS free tier, and the default template can easily steer you toward a paid instance.

Configuring everything takes no more than 5 minutes, although the domain can take up to 30 minutes to become active. After that, we're ready to populate our first index!

Populating Index

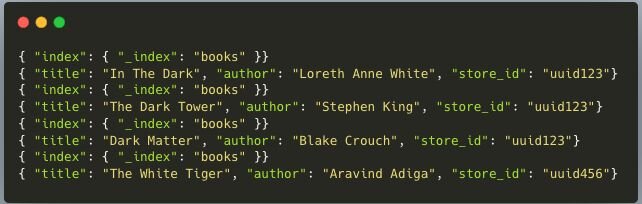

Remember the JSON with books we created earlier? Let's format it into the payload that OpenSearch's _bulk API expects: newline-delimited JSON, where each document is preceded by an action line.

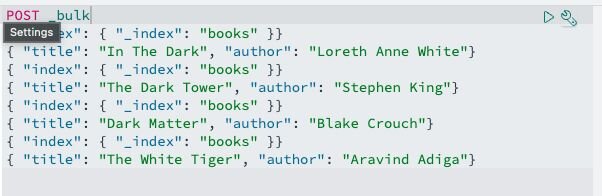
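Here is a minimal Python sketch of that formatting step. The index name `books`, the document IDs, and the two sample documents (including their `store_id` values) are illustrative assumptions; substitute your own dataset.

```python
import json

# Hypothetical sample documents; field names and values are illustrative
books = [
    {"title": "The White Tiger", "author": "Aravind Adiga", "store_id": "uuid456"},
    {"title": "In the Dark", "author": "Loreth Anne White", "store_id": "uuid123"},
]


def to_bulk_payload(docs: list, index: str = "books") -> str:
    """Serialize documents into the newline-delimited JSON that _bulk expects."""
    lines = []
    for i, doc in enumerate(docs, start=1):
        # Action line: which index (and document ID) to write the next line to
        lines.append(json.dumps({"index": {"_index": index, "_id": str(i)}}))
        # Source line: the document itself, kept on a single line
        lines.append(json.dumps(doc))
    # The bulk body must end with a newline character
    return "\n".join(lines) + "\n"


print(to_bulk_payload(books))
```

The printed output can go under `POST _bulk` in Dev Tools, or be sent as a request body with the `Content-Type: application/x-ndjson` header.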

From here, we have two options for populating the index: send a request to our domain (even with curl, if you want) or use the dashboard. I prefer the dashboard because it gives me more control over the data, so we'll go with that option.

Navigate to your test domain and open its OpenSearch Dashboards URL. From there, go to Dev Tools, set the request to POST _bulk, and paste our payload underneath it. Click the play button next to the request, and we're ready to start querying!

Query Types: A Practical Guide

Now that our index is populated, we can either send queries from our app, which is what you'll probably want in production, or run them in the dashboard for testing. The same Dev Tools console we used to populate the index works for all of the queries below.

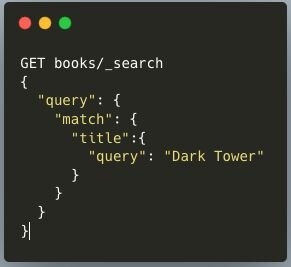

1. Match Query

Think of a Match query as your everyday search bar. OpenSearch analyzes what the user types, breaking it into individual terms, and finds documents that contain any of those terms. For example, if a customer types "Dark Tower", OpenSearch will find every document that contains "Dark" OR "Tower" and give each one a relevance score.

For brevity, we won't include the results for every sample query in this tutorial, but this is what the response looks like for the query above. Every search returns a response with this same structure.
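As a sketch, the request body for such a search can be built in Python. The `title` field name is an assumption about the sample index; the `operator` parameter is the part of the match query that controls the OR behavior described above.

```python
import json


def match_query(field: str, text: str, operator: str = "or") -> dict:
    """Build a match query; the text is analyzed into terms before matching."""
    return {
        "query": {
            "match": {
                field: {
                    "query": text,
                    # "or" (the default): any term may match; "and": every term must match
                    "operator": operator,
                }
            }
        }
    }


# Equivalent to typing "Dark Tower" into a search bar over titles
print(json.dumps(match_query("title", "Dark Tower"), indent=2))
```

Sending this body to `POST books/_search` (index name assumed) runs the search; switching `operator` to `"and"` would require both terms to appear.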

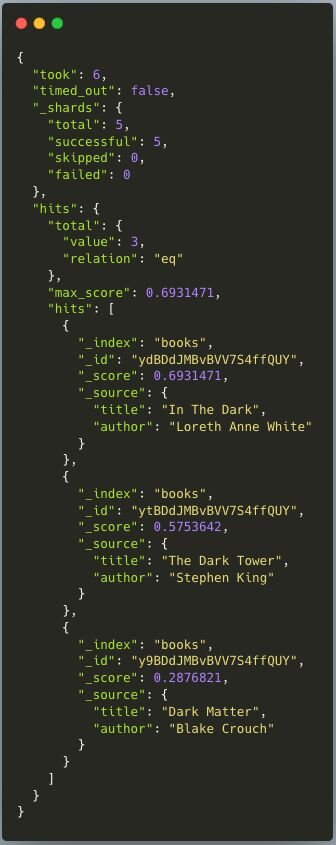

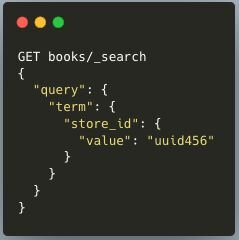
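When querying from an application rather than the dashboard, you usually only need a few parts of that response. This minimal sketch pulls titles and scores out of the `hits.hits` array; the sample response is a trimmed, made-up example with the standard structure, not real output from our index.

```python
def summarize_hits(response: dict) -> list:
    """Return (title, score) pairs in the order OpenSearch returned them.

    By default, hits are sorted by _score, best match first.
    """
    return [
        (hit["_source"].get("title"), hit["_score"])
        for hit in response["hits"]["hits"]
    ]


# A trimmed, illustrative response with the standard _search structure
sample_response = {
    "took": 3,
    "timed_out": False,
    "hits": {
        "total": {"value": 2, "relation": "eq"},
        "max_score": 1.2,
        "hits": [
            {"_index": "books", "_id": "1", "_score": 1.2, "_source": {"title": "The Dark Tower"}},
            {"_index": "books", "_id": "2", "_score": 0.4, "_source": {"title": "In the Dark"}},
        ],
    },
}

print(summarize_hits(sample_response))  # [('The Dark Tower', 1.2), ('In the Dark', 0.4)]
```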

2. Match Phrase Query

This one's stricter: it looks for the words in exactly the order they're typed. If someone searches for "Dark Tower", it'll find The Dark Tower, but "Tower Dark" won't match it. It's essential for matching exact phrases, like book titles or product names, where word order affects meaning.
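A sketch of the corresponding request body, again assuming a `title` field. The optional `slop` parameter is a real match_phrase setting that loosens the ordering requirement; leaving it at 0 gives the strict behavior described above.

```python
import json


def match_phrase_query(field: str, phrase: str, slop: int = 0) -> dict:
    """Build a match_phrase query: terms must appear in the given order."""
    return {
        "query": {
            "match_phrase": {
                field: {
                    "query": phrase,
                    # How far terms may move from their positions; 0 = exact phrase
                    "slop": slop,
                }
            }
        }
    }


print(json.dumps(match_phrase_query("title", "Dark Tower"), indent=2))
```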

3. Multi-Match Query

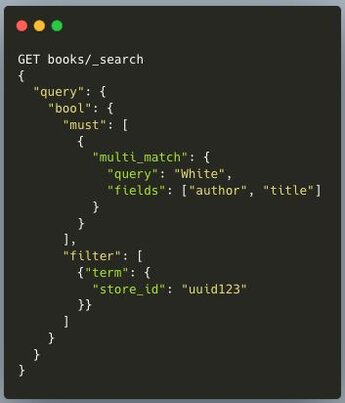

Sometimes you want to search across multiple fields at once. Maybe your customers should find "White" whether it's in the title or author name. Multi-Match lets you do exactly that. In our sample bookstore, this query will return two books. It matches the author Loreth Anne White and the title The White Tiger.

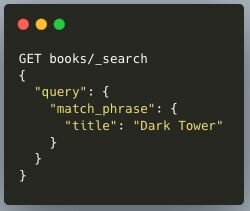
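A minimal sketch of a multi-match request body, assuming the sample index has `title` and `author` fields. Without an explicit `type`, multi_match defaults to `best_fields`, which scores each document by its best-matching field.

```python
import json


def multi_match_query(text: str, fields: list) -> dict:
    """Search the same text across several fields at once."""
    return {
        "query": {
            "multi_match": {
                "query": text,
                # A document matches if the text matches in any listed field
                "fields": fields,
            }
        }
    }


print(json.dumps(multi_match_query("White", ["title", "author"]), indent=2))
```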

4. Term Query

When exact matching is crucial, like searching for ISBNs in our bookstore, Term queries are your friend. They skip text analysis entirely and look for exact matches. This query would return only The White Tiger, since that's our only book for store 456.
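A sketch of a term query, assuming store IDs live in a `store_id` field mapped as `keyword` and that store 456's ID is the hypothetical value `uuid456`. Term queries are meant for such exact-value fields: run against an analyzed `text` field, they compare your input with lowercased, tokenized terms and often find nothing.

```python
import json


def term_query(field: str, value: str) -> dict:
    """Build a term query; the value is matched exactly, with no analysis."""
    return {"query": {"term": {field: {"value": value}}}}


# With dynamic mapping, strings also get a keyword subfield: use "store_id.keyword"
print(json.dumps(term_query("store_id", "uuid456"), indent=2))
```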

Understanding Search Relevance

When you search in OpenSearch, each result gets a relevance score, returned in the `_score` field of each hit. The higher the score, the better the document matches your query. Scores are relative values rather than percentages, so they are only meaningful for comparing results of the same query. Scoring happens in what OpenSearch calls "Query Context." For example, if you search for "White" in our bookstore database, you'll find "In the Dark" by Loreth Anne White and "The White Tiger" by Aravind Adiga. Here's where it gets interesting: you can decide whether matches in titles should score higher than matches in author names, tailoring the search to what your users value most.
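One way to express that preference is field boosting in a multi_match query, sketched below. The `^` suffix is standard multi_match syntax for multiplying a field's score contribution; the field names and the default boost of 2 are assumptions you would tune against your own users' behavior.

```python
import json


def boosted_search(text: str, title_boost: float = 2.0) -> dict:
    """Search title and author, weighting title matches more heavily."""
    return {
        "query": {
            "multi_match": {
                "query": text,
                # e.g. "title^2" doubles the title field's contribution to _score
                "fields": [f"title^{title_boost:g}", "author"],
            }
        }
    }


print(json.dumps(boosted_search("White"), indent=2))
```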

Query vs. Filter Context

While the relevance score measures how well something matches, another important concept is "Filter Context." Instead of asking, "How well does this match?", filters simply ask, "Does this match?", a yes-or-no answer. Because filters skip scoring and OpenSearch can cache their results, they are a cheap way to narrow down the set of documents the scored parts of a query have to rank, which can significantly improve performance. For instance, if you're searching for "White" in our bookstore, you might want to filter for books in a specific store or with stock available.
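A minimal sketch of that idea using a bool query: the `match` clause runs in query context and is scored, while the clauses under `filter` only include or exclude documents. The `store_id` and `stock` field names and the `uuid123` value are hypothetical.

```python
import json


def search_in_store(text: str, store_id: str) -> dict:
    """Score on title relevance; keep only in-stock books from one store."""
    return {
        "query": {
            "bool": {
                # Query context: contributes to _score
                "must": [{"match": {"title": text}}],
                # Filter context: yes/no checks, not scored, cacheable
                "filter": [
                    {"term": {"store_id": store_id}},
                    {"range": {"stock": {"gt": 0}}},
                ],
            }
        }
    }


print(json.dumps(search_in_store("White", "uuid123"), indent=2))
```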

Advanced Implementation: Complex Queries

Our little bookstore is growing, isn't it? We now have a few stores open, and the searches are becoming increasingly complicated. Say a customer wants to find books with "White" in the title or author name, but only in store123. Here's how we'd write that:
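A sketch of that query in Python, assuming a `store_id` keyword field and that store 123's ID is `uuid123` (both hypothetical names for illustration):

```python
import json

query = {
    "query": {
        "bool": {
            # Scored: "White" may appear in either the title or the author field
            "must": [
                {"multi_match": {"query": "White", "fields": ["title", "author"]}}
            ],
            # Not scored: keep only books stocked by store 123
            "filter": [{"term": {"store_id": "uuid123"}}],
        }
    }
}

print(json.dumps(query, indent=2))
```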

This query combines several concepts:

- Searches for "White" in titles and authors

- Filters for store 123 (ID uuid123)

- Returns results ordered by relevance

Notice that the query above would not return The White Tiger, even though it has “White” in the title. That is because we filtered by store 123, and that title is only available in store 456.

Boolean queries can use these parameters:

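- must: All specified queries must match, and they contribute to the score

- should: Matching documents score higher; if the query has no must or filter clause, at least one should clause must match

- must_not: Excludes documents that match this query (runs in filter context, so it doesn't affect scoring)

- filter: Like "must" but doesn't affect scoring, and its results can be cached, making it faster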

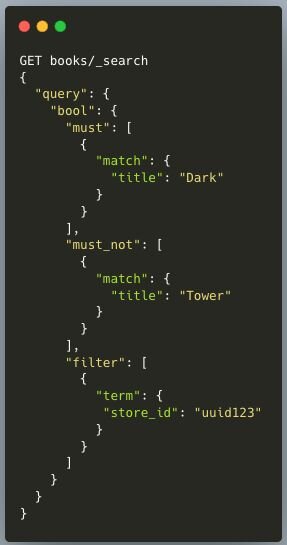

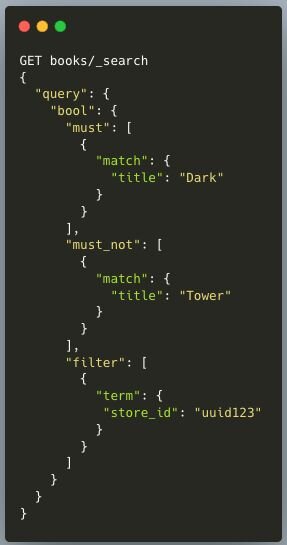

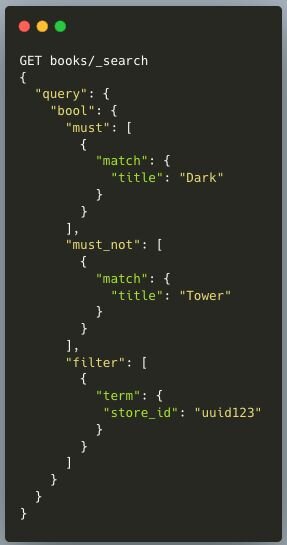

Looking at our sample data, let's try one final query:
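The exact query isn't reproduced here; the sketch below is one bool query, using the same assumed `title` field, that is consistent with the results described next: it requires "dark" and excludes "tower".

```python
import json

final_query = {
    "query": {
        "bool": {
            # Every result must contain "dark" in its title
            "must": [{"match": {"title": "dark"}}],
            # Drop any book whose title contains "tower"
            "must_not": [{"match": {"title": "tower"}}],
        }
    }
}

print(json.dumps(final_query, indent=2))
```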

Can you guess which books this query would return? That’s right, it would give us “In The Dark” and “Dark Matter” but not “The Dark Tower”. The beauty of boolean queries is how they let you create complex search logic with straightforward, readable syntax.

Performance Optimization: Lessons from Production

Having scaled from basic bookstore searches to warehouse systems processing millions of daily queries, we've identified key performance patterns:

Filter Usage: Put yes-or-no conditions in filter context instead of scored clauses, so OpenSearch can skip relevance calculations for them and reuse cached filter results. In our production systems, this reduced query time by up to 60%. Here's what this looks like in practice.

Non-optimized query:
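A hypothetical example, assuming a `store_id` keyword field: here the store restriction sits in `must`, so OpenSearch scores it along with the text match even though it is a plain yes-or-no condition.

```python
import json

non_optimized = {
    "query": {
        "bool": {
            "must": [
                {"match": {"title": "White"}},
                # Yes/no condition in query context: scored, not eligible for the filter cache
                {"term": {"store_id": "uuid123"}},
            ]
        }
    }
}

print(json.dumps(non_optimized, indent=2))
```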

Optimized query with filter:
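The same hypothetical search (assumed `store_id` field and `uuid123` value) with the store restriction moved to `filter`: the text match is still scored, but the store check becomes a cacheable yes-or-no filter that doesn't affect scoring. Both versions match the same documents; only the scores and execution cost differ.

```python
import json

optimized = {
    "query": {
        "bool": {
            # Scored: relevance of the title match
            "must": [{"match": {"title": "White"}}],
            # Not scored, cacheable: restrict to one store
            "filter": [{"term": {"store_id": "uuid123"}}],
        }
    }
}

print(json.dumps(optimized, indent=2))
```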

Beyond query optimization, we've found these practices essential for maintaining performance at scale:

- Proper Sharding: Match your shard size to your data volume. Too many small shards create overhead, while too few large shards limit parallelization.

- Index Aliases: Use aliases to swap between indices during reindexing, preventing downtime during data updates.

- Health Monitoring: Track query latency and cluster health metrics to catch issues before they impact users.

- Data Lifecycle: Use Index State Management (ISM) policies, OpenSearch's counterpart to index lifecycle policies, to roll over, shrink, or delete aging indices as your datasets grow.
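For the index alias pattern, here is a sketch of the body for OpenSearch's `POST _aliases` endpoint. The alias and index names (`books`, `books_v1`, `books_v2`) are hypothetical. Because the actions in one request are applied atomically, clients querying the alias never see a moment where it points at no index.

```python
import json


def swap_alias(alias: str, old_index: str, new_index: str) -> dict:
    """Build a POST _aliases body that repoints an alias in one atomic step."""
    return {
        "actions": [
            # Detach the alias from the index being retired
            {"remove": {"index": old_index, "alias": alias}},
            # Attach it to the freshly reindexed copy
            {"add": {"index": new_index, "alias": alias}},
        ]
    }


print(json.dumps(swap_alias("books", "books_v1", "books_v2"), indent=2))
```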

Conclusion

These patterns scale from basic product searches to complex warehouse management. Building search functionality is iterative: start with simple match queries to establish a baseline, add filters where monitoring shows performance bottlenecks, and use real query patterns from your users to guide optimization. Every deployment will face unique challenges, but focusing on your core use cases and measurable performance metrics will keep your implementation on track.
