Best Practices for Building Robust and Secure AWS Lambda Functions

Protecting a business from worst-case scenarios is key when using a Lambda function to integrate a third-party event with a modern cloud application. We share the best practices and functionality you need to build a robust, secure, production-ready Lambda function.

AWS Lambda functions are a powerful tool for modern cloud applications — providing serverless computing power, securely handling sensitive data, and ensuring continuous operation. They enable developers to run code without managing servers or infrastructure, and are cost-effective, scalable, flexible, and easy to deploy.

In our previous article on using AWS Lambda and API gateways to handle webhooks in a serverless environment, we shared how using Lambda functions allowed us to integrate a third-party event with our application.

In this article, we will cover the following best practices for robust and secure AWS Lambda functions:

- Data logging

- Base64 encoding

- Error handling

- Payload verification

- Request validation

As a starting point, here is our basic Lambda:

    import { returnProduct } from './db';

    export const handler = async (event, context) => {
      const { productIds } = event.body;
      for (const productId of productIds) {
        await returnProduct(productId);
      }
    }

Now we’ll go a little further and complete the functionality of the Lambda that integrates the third party with our main app.

Our goal is to receive a list of products that have been returned to the store and mark them as returned in our application.

Such a barebones function is only good for testing purposes. If we want it to be production-ready, we need to address quite a few points it currently lacks, such as logging in a serverless environment, error handling, using external APIs, managing environment variables, and accessing private information.

It’s critical that we do so, since from a business perspective, any time there’s an error or something goes wrong, issues will just end up on a customer support queue, with an unwanted complaint on the side. So we’ll need to prepare for worst-case scenarios and do everything we can to prevent this from happening.

Data Logging

We have a function that receives an array of strings called productIds and calls a method from an external library to make the update, but we don’t know which product or set of products is going to be processed. Also, if something goes wrong, we won't be able to know where exactly the issue shows up. Right now we don’t have any information that helps us here.

Therefore, we need to add a proper way to log all relevant events to our Lambda function. We can use console.log() to achieve this functionality. Lambda automatically sends all logs to CloudWatch without any extra steps on our end.

Each Lambda function comes with a CloudWatch log group and a log stream for each function instance, so we can also pinpoint the origin of each log. And we can use a function to encapsulate the behavior of writing the log, like this:

    function writeLog(message, level) {
      // One JSON object per entry keeps the logs easy to filter in CloudWatch
      console.log(
        JSON.stringify({
          level: level ? level.toLowerCase() : "info",
          message: String(message),
        })
      );
    }

Next, we can use it to log any event or piece of information we consider relevant. For example, when the payload arrives, we log the product IDs, and each time a product is marked as returned, we log that too.

    import { returnProduct } from './db';

    export const handler = async (event, context) => {
      const { productIds } = event.body;
      writeLog(`Product ids: ${JSON.stringify(productIds)}`);
      for (const productId of productIds) {
        await returnProduct(productId);
        writeLog(`Returned product: product_id: ${productId}`);
      }
    }
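To make entries easier to correlate, the logger can also carry the invocation's request ID. The sketch below is a hypothetical variation of writeLog rather than the helper used in this article: createLogger is an invented name, and it assumes the handler passes in the Lambda context object, whose awsRequestId property identifies the invocation.

```javascript
// Hypothetical helper: builds a logger bound to a single invocation.
// Assumes `context` is the Lambda context object (awsRequestId is its request ID).
function createLogger(context) {
  return function log(message, level = "info") {
    const entry = {
      level: String(level).toLowerCase(),
      requestId: context && context.awsRequestId,
      message: String(message),
    };
    // One JSON object per line, same shape as writeLog plus the request ID
    console.log(JSON.stringify(entry));
    return entry; // returned only to make the helper easy to test
  };
}
```

Inside a handler this could be used as `const log = createLogger(context);` followed by `log("Validation failed.", "Error");`.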

Base64 Encoding

By default, our Lambda assumes that the received information is either in plain text or JSON format. However, what happens if the body arrives base64-encoded, for example because the third-party application encodes it rather than sending plain text? Keep in mind that base64 is an encoding, not encryption, so it adds no confidentiality by itself. What matters for us is that we cannot know in advance which encoding the source will use to transmit the information to our Lambda.

The event that API Gateway passes to the Lambda includes an isBase64Encoded flag. It is mostly set for binary payloads, but, as mentioned above, it can also signal an encoded piece of information. Therefore, our Lambda needs to be prepared for an encoded source.

To know whether the payload is base64-encoded, we check the isBase64Encoded flag in the event, which could look like this:

{ "body": "eyJ0ZXN0IjoiYm9keSJ9", "httpMethod": "POST", "isBase64Encoded": true, "queryStringParameters": { "foo": "bar" } ..... }

Furthermore, for the sake of keeping the code clean and legible, we can create a function for decoding the payload.

function decodeBase64(encodedPayload){ return Buffer.from(encodedPayload, 'base64').toString('utf-8'); }

And we can add a condition that, if it is encoded, requires decoding the payload first before processing it.

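    import { returnProduct } from './db';

    export const handler = async (event, context) => {
      // Decode the whole body first if it is base64, then parse it if it is still a JSON string
      const rawBody = event.isBase64Encoded ? decodeBase64(event.body) : event.body;
      const body = typeof rawBody === "string" ? JSON.parse(rawBody) : rawBody;
      const { productIds } = body;
      for (const productId of productIds) {
        .......
      }
    }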
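As a rough illustration of the decoding step in isolation, the sketch below normalizes the body before the handler touches it. parseBody is a hypothetical helper, not part of the code above, and it assumes the body is JSON once decoded. With API Gateway's Lambda proxy integration the body arrives as a string even when it is not base64-encoded, so the sketch parses strings in both cases.

```javascript
// Hypothetical helper: returns the request body as a plain object.
// Assumes the decoded body is JSON.
function parseBody(event) {
  if (event.body == null) {
    throw new Error("Request body is empty");
  }
  // The flag applies to the whole body, so decode event.body, not a field inside it
  const raw = event.isBase64Encoded
    ? Buffer.from(event.body, "base64").toString("utf-8")
    : event.body;
  // Bodies that are already objects are passed through untouched
  return typeof raw === "string" ? JSON.parse(raw) : raw;
}
```

With the sample event shown earlier, `parseBody(event)` decodes "eyJ0ZXN0IjoiYm9keSJ9" to the JSON text `{"test":"body"}` and returns it as an object.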

Okay, so we’ve taken care of logging and proper handling of base64 encoding, but what if something goes wrong and for some reason the product state can’t be updated, or the normal flow of the application is interrupted?

In this case, we need to add proper error handling and that’s what we’re going to cover in the next section.

Error Handling

When designing your Lambda function, it's important to anticipate potential errors and exceptions that might occur during its execution. Even if you have written near-perfect code, unexpected issues might still happen.

To ensure that your Lambda function can handle errors gracefully, you should design it to be resilient by providing appropriate error-handling mechanisms. This means that your function should be able to detect and handle errors, report them in a meaningful way, and provide fallback behaviors to ensure that your users can continue using your application even if the function fails.

So, we’ve just added a function to log any relevant event and handle both encoding cases, but what happens if the product ID is not found, or for some reason, the Lambda is not able to update the product state?

Right now we’re not handling any errors that may occur when calling returnProduct, so if there’s an error, we won’t notice it at all and won’t be able to trace it. If something goes wrong, our application could crash if we don’t handle errors properly.

To fix this, let's add a try-catch block in case there are any problems, or for some reason, the serverless function can’t update the products' states and add logs so we know what happened. Note that there’s also a try-catch block in our for loop, since we want to know if only individual products failed to be returned, while still completing successful ones.

    import { returnProduct } from "./db";

    export const handler = async (event, context) => {
      try {
        // Decode the body first if it is base64, then parse it if it is a JSON string
        const rawBody = event.isBase64Encoded ? decodeBase64(event.body) : event.body;
        const { productIds } = typeof rawBody === "string" ? JSON.parse(rawBody) : rawBody;
        writeLog(`Product ids: ${JSON.stringify(productIds)}`);

        for (const productId of productIds) {
          try {
            await returnProduct(productId);
            writeLog(`Returned product: product_id: ${productId}`);
          } catch (err) {
            // Log the failure and carry on with the remaining products
            writeLog(
              `An error occurred trying to return the product: ${productId}`,
              "Error"
            );
          }
        }
      } catch (error) {
        writeLog(`An error occurred processing products: ${error.message}`, "Error");
      }
    };

Now we have a function that takes product information and tries to update the state. It also logs any relevant information, and if something goes wrong, it logs the error instead of stopping the flow.

However, there’s no guarantee that the product has been returned. We only attempted to return it. What if the product had already been returned? Or if the return failed and the status never changed?

In case one of these occurred, we’ll need to log this. Having three arrays, each for one state, could be a valid option, then we can show this information at the end of the process:

    // ProductStatus is assumed to be exported by ./db along with the helpers
    import { returnProduct, getProductStatus, ProductStatus } from './db';

    export const handler = async (event, context) => {
      // Declared outside the try block so the summary log below can read them
      const processedProducts = [];
      const failedProducts = [];
      const skippedProducts = [];
      try {
        // Decode the body first if it is base64, then parse it if it is a JSON string
        const rawBody = event.isBase64Encoded ? decodeBase64(event.body) : event.body;
        const { productIds } = typeof rawBody === "string" ? JSON.parse(rawBody) : rawBody;
        writeLog(`Product ids: ${JSON.stringify(productIds)}`);

        for (const productId of productIds) {
          try {
            writeLog(`Started processing: ${productId}`);
            const productStatus = await getProductStatus(productId);
            if (productStatus === ProductStatus.RETURNED) {
              skippedProducts.push(productId);
              writeLog(`Skipped processing: ${productId}`);
            } else {
              await returnProduct(productId);
              // Re-read the status to confirm the return actually went through
              const updatedProductStatus = await getProductStatus(productId);
              if (updatedProductStatus === ProductStatus.RETURNED) {
                processedProducts.push(productId);
                writeLog(`Processed successfully: ${productId}`);
              } else {
                failedProducts.push(productId);
                writeLog(`Failed processing: ${productId}`);
              }
            }
          } catch (err) {
            // An exception also counts as a failed return
            failedProducts.push(productId);
            writeLog(
              `An error occurred trying to return productId: ${productId}.`,
              "Error"
            );
          }
        }
      } catch (error) {
        writeLog(`An error occurred processing products: ${error.message}`, "Error");
      }
      writeLog(
        `Processing finished. Successful returns: ${processedProducts.toString()}, Failed returns: ${failedProducts.toString()}, Skipped returns: ${skippedProducts.toString()}.`
      );
    }

Here we’ll be updating the status of each product individually, and keeping track of those that failed, as well as those we skipped processing simply because they were already returned.

This way, repeated calls with the same product IDs should be much quicker, as we’d only need to check that the products have already been returned. We’re also logging much-needed information about which products need to be reprocessed.

Still, this function could use some refactoring to improve readability and maintainability, as it’s getting too long for such a short task. For starters, we can move the code in our for loop to a separate function:

    // ProductStatus is assumed to be exported by ./db along with the helpers
    import { returnProduct, getProductStatus, ProductStatus } from "./db";

    export const handler = async (event, context) => {
      // Declared outside the try block so the summary log below can read them
      const processedProducts = [];
      const failedProducts = [];
      const skippedProducts = [];
      try {
        // Decode the body first if it is base64, then parse it if it is a JSON string
        const rawBody = event.isBase64Encoded ? decodeBase64(event.body) : event.body;
        const { productIds } = typeof rawBody === "string" ? JSON.parse(rawBody) : rawBody;
        writeLog(`Product ids: ${JSON.stringify(productIds)}`);

        for (const productId of productIds) {
          // Await each product so the summary is logged after all of them finish
          await processProduct(
            productId,
            processedProducts,
            failedProducts,
            skippedProducts
          );
        }
      } catch (error) {
        writeLog(`An error occurred processing products: ${error.message}`, "Error");
      }
      writeLog(
        `Processing finished. Successful returns: ${processedProducts.toString()}, Failed returns: ${failedProducts.toString()}, Skipped returns: ${skippedProducts.toString()}.`
      );
    };

    // Must be async because it awaits the database helpers
    const processProduct = async (
      productId,
      processedProducts,
      failedProducts,
      skippedProducts
    ) => {
      try {
        writeLog(`Started processing: ${productId}`);
        const productStatus = await getProductStatus(productId);
        if (productStatus === ProductStatus.RETURNED) {
          skippedProducts.push(productId);
          writeLog(`Skipped processing: ${productId}`);
        } else {
          await returnProduct(productId);
          // Re-read the status to confirm the return actually went through
          const updatedProductStatus = await getProductStatus(productId);
          if (updatedProductStatus === ProductStatus.RETURNED) {
            processedProducts.push(productId);
            writeLog(`Processed successfully: ${productId}`);
          } else {
            failedProducts.push(productId);
            writeLog(`Failed processing: ${productId}`);
          }
        }
      } catch (err) {
        // An exception also counts as a failed return
        failedProducts.push(productId);
        writeLog(
          `An error occurred trying to return productId: ${productId}.`,
          "Error"
        );
      }
    };

With all this done, we test our endpoint.

And we realize that we need to go over to CloudWatch on every call to see if there were any issues, as there’s no return message for our Lambda.

We’re still missing some sort of feedback here.

So let’s add two short helper functions: createResponse gives us a message structure, while createReturnMessage builds a readable message with the data we’d want to see on each Lambda call.


The idea is to return a useful message so that the user knows the values of processedProducts, failedProducts, skippedProducts, and if an error occurred.
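One possible shape for these two helpers is sketched below. Only the call createResponse(statusCode, message) appears elsewhere in this article. The response fields follow the format API Gateway expects from a proxy-integrated Lambda (statusCode, headers, and body as a string), while the signature and wording of createReturnMessage are assumptions made for illustration.

```javascript
// Wraps a message object in the response structure API Gateway expects
// from a proxy-integrated Lambda.
function createResponse(statusCode, message) {
  return {
    statusCode,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(message), // the body must be a string
  };
}

// Hypothetical signature: summarizes one run so the caller does not have to
// open CloudWatch to see what happened.
function createReturnMessage(processedProducts, failedProducts, skippedProducts, error) {
  return {
    message: error ? "Processing finished with errors." : "Processing finished.",
    processedProducts,
    failedProducts,
    skippedProducts,
    error: error ? error.message : null,
  };
}
```

Under these assumptions, the handler could end with `return createResponse(200, createReturnMessage(processedProducts, failedProducts, skippedProducts));`.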

Now that we’re all set, we try our function again, only to realize we’re still missing some verifications on our side: we can still get multiple unwanted calls to our function, each one costing us money and opening the door to security-related issues. Filtering those calls out will also avoid having to call the external API on every request.

Payload Verification

As we mentioned, we could have unwanted calls to our Lambda. But what would happen if a malicious user or “man in the middle” sent requests with invalid or malicious data? This could lead to security vulnerabilities, data breaches, and other types of attacks. We could end up with data loss or data tampering. The Lambda could stop working, or we could incur too much cost on our side.

We need to take care of that.

To address this issue, we can introduce payload verification to ensure that requests come from the right source and not some unknown third party. By validating the payload against a keyed hash (an HMAC computed with a shared secret), we can improve overall security.

To validate that the payload came from the expected third party, we’ll assume the payload structure is as follows:

    {
      body: {
        productIds: string[],
        event: "return_products"
      },
      headers: {
        "X-Return-Signature": string // HMAC-SHA256 of the payload, hex-encoded, computed with a secret key
      }
    }

What’s important here is the secret key. This key is provided by the third party and stored on our end as a secret in AWS Secrets Manager.

To do this:

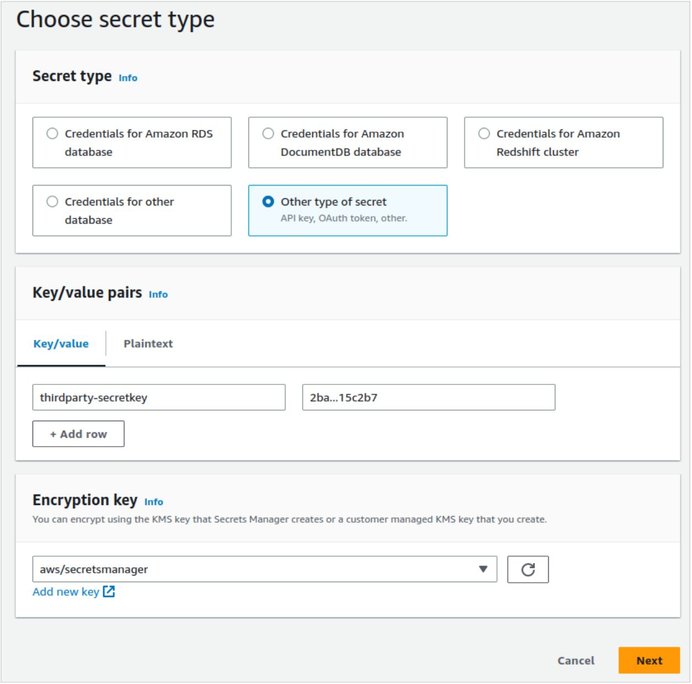

- Go to AWS Secrets Manager -> Secrets.

- Click Store a new secret:

- Secret Type: Other type of secret

- Key: thirdparty-secretkey, value: the secret key provided by the third party (for example “2ba...15c2b7”)

- Encryption key: leave default “aws/secretsmanager”.

- Click Next.

- Secret name: thirdparty-secretkey

- Click Next on Step 2.

- Click Next on Step 3.

- Review and click Store.

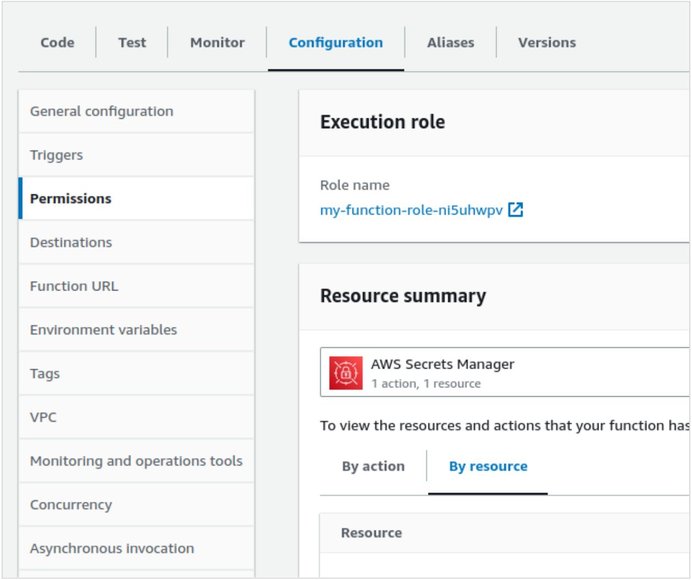

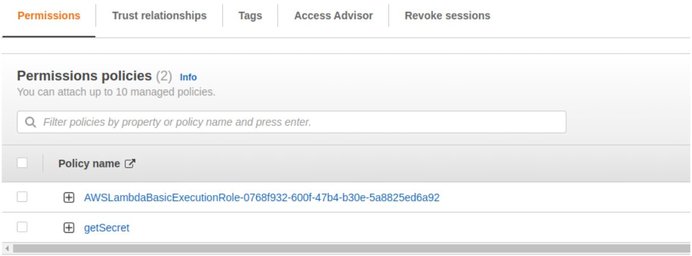

IMPORTANT: We need to grant the Lambda permission to access Secrets Manager. This can be done by adding a permission policy to the Lambda’s execution role.

- Go to Configuration -> click on the execution role created for this Lambda:

- It will open the IAM role in a new tab -> add an inline policy granting access to Secrets Manager and its GetSecretValue action:

- The policy will look similar to this JSON:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "GetSecrets",
          "Effect": "Allow",
          "Action": "secretsmanager:GetSecretValue",
          "Resource": "arn:aws:secretsmanager:*:account-id:secret:*"
        }
      ]
    }

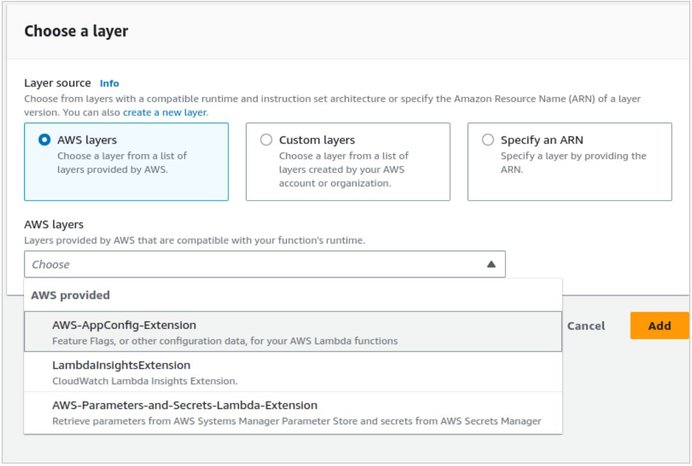

Having set that up, we will need to add the AWS Parameters and Secrets Lambda Extension to be able to retrieve and cache secrets without an SDK.

To do so, we need to go to the Layers section and add AWS Parameters and Secrets Lambda extension.

Then, to fetch the secret and use it in our Lambda, we could create a function like this:

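    async function getSecret(secretName) {
      try {
        // The extension authenticates local requests with the session token
        const headers = {
          "X-Aws-Parameters-Secrets-Token": process.env.AWS_SESSION_TOKEN,
        };
        // Use the secretName argument instead of a hard-coded secret ID
        const res = await fetch(
          `http://localhost:2773/secretsmanager/get?secretId=${encodeURIComponent(secretName)}`,
          { headers }
        );
        if (!res.ok) {
          writeLog(
            `Getting the ${secretName} secret failed with status ${res.status}.`,
            "Error"
          );
          return undefined;
        }
        const data = await res.json();
        // SecretString holds the stored key/value pairs as a JSON string
        return JSON.parse(data.SecretString);
      } catch (err) {
        writeLog(
          `An error occurred getting the ${secretName} secret.`,
          "Error"
        );
        return undefined;
      }
    }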

Following AWS’s documentation, we send process.env.AWS_SESSION_TOKEN in the X-Aws-Parameters-Secrets-Token header and retrieve the secret with fetch (globally available in the Node.js 18.x runtime).

Then, using this secret we’ll validate all payloads arriving into our Lambda like so:

    import { createHmac } from "crypto";

    async function validateThirdParty(event) {
      const { body, headers } = event;
      const secretName = "thirdparty-secretkey";
      const secret = await getSecret(secretName);
      // The secret's key/value pairs may arrive as a JSON string, so parse if needed
      const secretValues = typeof secret === "string" ? JSON.parse(secret) : secret;
      if (!secretValues || !secretValues[secretName]) {
        writeLog("No secret key was found", "Error");
        return false;
      }
      // HMAC-SHA256 of the serialized body, keyed with the shared secret
      const expectedSignature = createHmac("sha256", secretValues[secretName])
        .update(JSON.stringify(body))
        .digest("hex");
      const signature = headers["X-Return-Signature"];
      if (!signature || signature !== expectedSignature) {
        writeLog("Wrong signature or secretKey", "Error");
        return false;
      }
      return true;
    }
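A possible hardening of the comparison above, sketched under a few assumptions: the third party signs the exact raw request body, the signature is hex-encoded, and the helper names signPayload and isValidSignature are hypothetical. It compares the digests with crypto.timingSafeEqual rather than !==, so the time taken does not depend on how many leading characters match. The sketch uses require so it runs as a standalone script; in an ES module the import form shown above works the same way.

```javascript
const { createHmac, timingSafeEqual } = require("node:crypto");

// Hex-encoded HMAC-SHA256 of the raw body, keyed with the shared secret.
function signPayload(rawBody, secretKey) {
  return createHmac("sha256", secretKey).update(rawBody).digest("hex");
}

// Hypothetical helper: true only when the received signature matches.
function isValidSignature(rawBody, signature, secretKey) {
  if (typeof signature !== "string") return false;
  const expected = Buffer.from(signPayload(rawBody, secretKey), "hex");
  const received = Buffer.from(signature, "hex");
  // timingSafeEqual throws on buffers of different length, so check first
  if (received.length !== expected.length) return false;
  return timingSafeEqual(received, expected);
}
```

Signing the raw body string, rather than a re-serialized object, avoids mismatches caused by differences in key order or whitespace between the two sides.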

This way, we ensure that the third-party is the one we’re expecting. All that’s left to do is call this validation at the start of our handler:

    export const handler = async (event, context) => {
      // Decode the body first if it is base64, then parse it if it is a JSON string
      const rawBody = event.isBase64Encoded ? decodeBase64(event.body) : event.body;
      const { productIds } = typeof rawBody === "string" ? JSON.parse(rawBody) : rawBody;
      writeLog(`Product ids: ${JSON.stringify(productIds)}`);

      const isValid = await validateThirdParty(event);
      if (!isValid) {
        writeLog("Validation failed.", "Error");
        const message = {
          message: "Request did not come from the correct third party.",
        };
        return createResponse(400, message);
      }
      // ...
    };

Request Validation

We can also stop invalid requests before they ever reach the Lambda by enabling request validation in the API Gateway console. This ensures that requests to this POST method include the X-Return-Signature header. Any request missing the required body or header is rejected, which prevents unnecessary Lambda invocations.

To validate the header:

- Navigate to the Method Execution of the POST method on the API Gateway console -> Resources.

- If no Request Validator is selected, choose either validator which includes headers.

- Under HTTP Request Headers, add a new header, X-Return-Signature, and set it as required.

- Remember that this header will be the HMAC-SHA256 digest of the payload, computed with our secret key.

- Just as a tip, we can compute the signature manually for testing purposes the same way we’re doing the validation:

    import crypto from "crypto";

    // ourSecretKey and parsedBody are placeholders for the test values
    const thirdPartySignature = crypto
      .createHmac("sha256", ourSecretKey)
      .update(JSON.stringify(parsedBody))
      .digest("hex");

Deploy the API and test the method (with any HTTP client/cURL) with and without the header. You should see the following message if the header is missing:

{ "message": "Missing required request parameters: [X-Return-Signature]" }

To validate the body:

- Navigate to the Method Execution of the POST method on the API Gateway console -> Resources.

- If no Request Validator is selected, choose the validate body and header options.

- First you need to have already created a model schema.

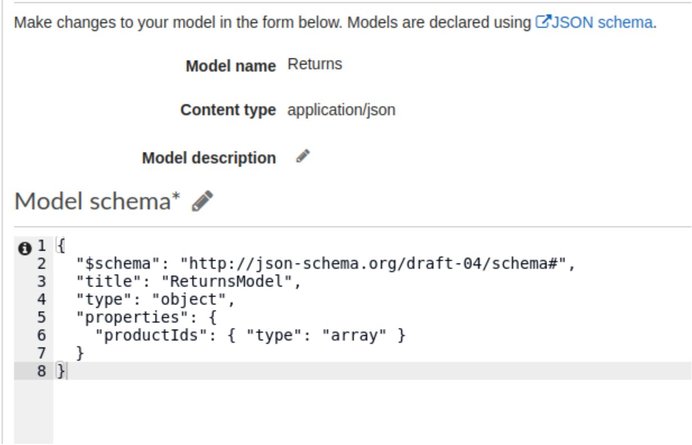

- Create the model schema

- It should be something like this:

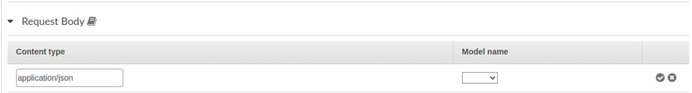

Then add a line with “application/json” and the model name.

Finally, you need to Deploy the API again.
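Since the schema itself is only shown as a screenshot, here is a hypothetical model for the payload structure assumed earlier, written as a JavaScript object so it can be printed and pasted into the console's model editor. The title, the minItems constraint, and the enum are assumptions made for illustration; API Gateway models are written in JSON Schema draft 4.

```javascript
// Hypothetical model: the field names come from the payload structure assumed
// in this article, the constraints are illustrative only.
const returnProductsModel = {
  $schema: "http://json-schema.org/draft-04/schema#",
  title: "ReturnProductsRequest",
  type: "object",
  required: ["productIds", "event"],
  properties: {
    productIds: {
      type: "array",
      minItems: 1,
      items: { type: "string" },
    },
    event: { type: "string", enum: ["return_products"] },
  },
};

// Print the JSON to paste into the model editor.
console.log(JSON.stringify(returnProductsModel, null, 2));
```

With a model along these lines attached to the method, a request whose body lacks productIds or event would be rejected by API Gateway before the Lambda is invoked.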

Conclusion

As we’ve seen, ensuring the security and reliability of serverless Lambda functions is critical to the success of modern cloud applications. Even if Lambda functions are relatively easy to develop, it’s essential to apply the same level of rigor we would to any web application and to be aware that a significant amount of work is required in order to get them up and running in a production environment. In production, even small errors or vulnerabilities can have drastic consequences, such as data loss, service downtime, or security breaches.

Therefore it’s critical to carefully take measures such as handling errors effectively, logging data - all in a structured way - and verifying that requests come from the correct source, so we can make our Lambdas secure and resilient.

Such measures not only prevent potential security vulnerabilities but also help reduce costs by minimizing downtime and data loss. It is important to stay up-to-date with best practices and new security features as they become available, to continuously improve the security of Lambda functions and stay ahead of potential threats.
