Deploying AWS Lambdas Using GitHub Actions

AWS Lambda can offer unparalleled benefits as a serverless computing platform, but the manual deployment process can be complex and time-consuming, especially when dealing with multiple environments. Learn how we leverage GitHub Actions to deploy multiple AWS Lambda functions at once, dramatically improving our continuous deployment process.

AWS Lambda is a leading serverless computing platform, offering flexibility, scalability, and cost-effectiveness. However, the manual deployment process can be complex and time-consuming, and companies frequently miss the established CI/CD best practices of traditional server-based applications.

In previous articles, we’ve covered how to create a simple AWS Lambda from scratch to use with webhooks, and how to make them as robust and secure as possible.

However, we’re still missing some very important steps, which we would expect to see in a production-ready solution: Continuous Deployment (CD).

In this article, we share how we leverage GitHub Actions to deploy multiple AWS Lambda functions simultaneously.

In our case, we want to deploy multiple functions at once in our workflow. For simplicity’s sake, we’ll arrange our functions as follows, with one folder per function and a single index.js file in each:

    src
    ├── lambda1
    │   └── index.js
    └── lambda2
        └── index.js

And we are using the following sample code from one of our previous articles:

    // lambda1/index.js
    export async function lambdaHandler(event, context) {
      return {
        statusCode: 200,
        body: JSON.stringify({ message: 'Hello from Lambda 1' })
      };
    }

    // lambda2/index.js
    export async function lambdaHandler(event, context) {
      return {
        statusCode: 200,
        body: JSON.stringify({ message: 'Hello from Lambda 2' })
      };
    }
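Before wiring up any deployment, it can help to confirm locally that a handler returns the expected response shape. The following is a minimal sketch, assuming Node.js is installed; the handler is copied inline rather than imported, and the empty event and context objects are placeholders, not real Lambda inputs.

```javascript
// Local smoke check for a handler (a sketch; not part of the deployed code).
// The handler body mirrors lambda1/index.js from the sample above.
async function lambdaHandler(event, context) {
  return {
    statusCode: 200,
    body: JSON.stringify({ message: 'Hello from Lambda 1' }),
  };
}

// Placeholder event/context: real invocations receive richer objects.
const pending = lambdaHandler({}, {});

pending.then((response) => {
  const body = JSON.parse(response.body);
  console.log(response.statusCode, body.message); // 200 Hello from Lambda 1
});
```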

As the title implies, we’ll be hosting the code for these functions on GitHub and deploying them to our AWS account using GitHub Actions. Make sure you already have AWS credentials (an access key ID and a secret access key) stored as the repository secrets AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY, because the workflow will use them.

GitHub Actions

GitHub Actions is a robust automation tool that can help us automate the workflow and make our development process more efficient. With GitHub Actions, you can easily set up and configure tasks that will be triggered by events in your repository.

Setting up a GitHub Actions workflow

First, we need to tell GitHub Actions that we are going to use workflows. Create a folder .github/workflows and create a new file deploy.yaml (You can choose any name you want).

GitHub will check this folder and process all .yaml files.

(Note: you need to enable the use of workflows in your GitHub account.)

Since we currently have two serverless functions to deploy, we’ll start with the simplest approach: one job per function. Each job zips the function’s code and then updates the corresponding Lambda function.

deploy.yaml:

    name: Deploying multiple Lambdas
    on:
      push:
        branches:
          - main
    jobs:
      lambda1:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - uses: actions/setup-node@v3
            with:
              node-version: 16
          - uses: aws-actions/configure-aws-credentials@v2
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: us-east-2
          - run: zip -j lambda1.zip ./lambda1/index.js
          - run: aws lambda update-function-code --function-name=lambda1 --zip-file=fileb://lambda1.zip
      lambda2:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - uses: actions/setup-node@v3
            with:
              node-version: 16
          - uses: aws-actions/configure-aws-credentials@v2
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: us-east-2
          - run: zip -j lambda2.zip ./lambda2/index.js
          # Each job targets its own function and its own archive
          - run: aws lambda update-function-code --function-name=lambda2 --zip-file=fileb://lambda2.zip

Let’s unwrap this file step by step:

    on:
      push:
        branches:
          - main

Here we define that this workflow will run every time code is pushed to the main branch.

    jobs:
      lambda1:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - uses: actions/setup-node@v3
            with:
              node-version: 16
          - uses: aws-actions/configure-aws-credentials@v2
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: us-east-2
          - run: zip -j lambda1.zip ./lambda1/index.js
          - run: aws lambda update-function-code --function-name=lambda1 --zip-file=fileb://lambda1.zip

Under the jobs keyword, we name each job we want to run. Since we’ll be doing one for each function, we’ll create two separate jobs.

The job runs on the latest version of Ubuntu and does the following:

It checks out the repository code using the actions/checkout action because we need to get the project from the repository.

The second step sets up the Node.js environment by using the actions/setup-node action and specifying that Node.js version 16 should be used.

Then, it connects to the AWS account, compresses the Lambda function’s code into a .zip file, and updates the function with the AWS CLI’s lambda update-function-code command.

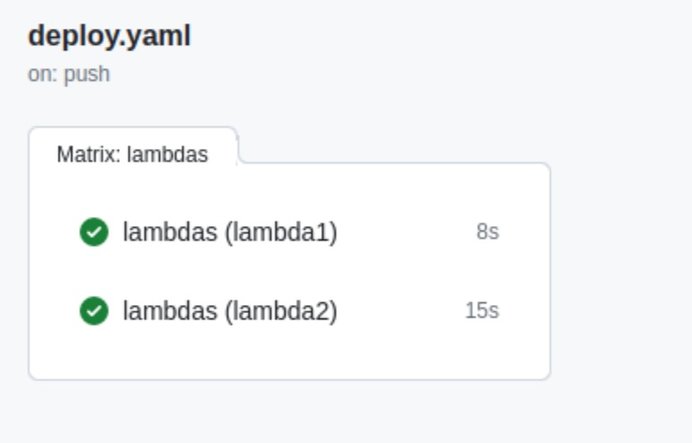

The Matrix Strategy

This approach is far from perfect. As the number of Lambda functions grows, there will be more and more jobs, and any change to the deployment flow will have to be repeated in each individual job. To tackle this issue and improve reusability, we can use a matrix strategy:

    name: Deploying multiple Lambdas
    on:
      push:
        branches:
          - main
    jobs:
      lambdas:
        strategy:
          matrix:
            lambda: [lambda1,lambda2]
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - uses: actions/setup-node@v3
            with:
              node-version: 16
          - uses: aws-actions/configure-aws-credentials@v2
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: us-east-2
          - run: zip -j ${{matrix.lambda}}.zip ./${{matrix.lambda}}/index.js
          - run: aws lambda update-function-code --function-name=${{matrix.lambda}} --zip-file=fileb://${{matrix.lambda}}.zip

Now we are telling the workflow to use a matrix strategy, which runs the job once for each value of the lambda variable: lambda1 and lambda2.

With the matrix approach, we are using the same code for each individual function. Another great benefit is that Lambda functions will be deployed simultaneously.
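To make the substitution concrete, here is a small illustration of how a list of names fans out into one set of commands per entry. It is only a sketch in plain JavaScript: expandMatrix is a hypothetical helper, and GitHub Actions performs the real expansion internally when it evaluates the matrix.lambda expressions.

```javascript
// Illustration only: mimics how a matrix of Lambda names expands into
// one set of commands per entry. `expandMatrix` is a hypothetical helper.
function expandMatrix(lambdas) {
  return lambdas.map((lambda) => ({
    lambda,
    commands: [
      `zip -j ${lambda}.zip ./${lambda}/index.js`,
      `aws lambda update-function-code --function-name=${lambda} --zip-file=fileb://${lambda}.zip`,
    ],
  }));
}

const jobs = expandMatrix(['lambda1', 'lambda2']);
for (const { lambda, commands } of jobs) {
  console.log(`job for ${lambda}:`);
  commands.forEach((command) => console.log(`  ${command}`));
}
```

Each entry corresponds to one independent job run, which is why a change to the steps only has to be made in one place.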

Autodiscovering Lambdas

Sequential Jobs

Now that we have functioning continuous deployment of our Lambda functions, let’s take a look at the following section of our workflow:

    lambdas:
      strategy:
        matrix:
          lambda: [lambda1,lambda2]

The issue here is that we’ve hardcoded the names of our functions. What if we need more Lambdas, or rename one of the folders? We would need to update the array every time.

A better way to handle this is to autodiscover the Lambdas.

We can establish a convention that each Lambda must be located in a separate folder. Once we reach a consensus on this, we can generate a list of the folders, which will provide us with the complete set of Lambdas that need to be deployed.

In our workflow, we can set this up as two separate jobs. The first gets the list of Lambda folder names, and the matrix job depends on it.

Matrix jobs are executed in parallel, but the needs keyword makes jobs run sequentially: the first job must finish collecting the Lambda names before the second job can use them to run the deployment tasks.

First, we’ll need to get the folder names of our Lambdas. There are many ways to do this. We’ll be using the tree command, but you can use ls, find, or any other approach that fits your needs.

For example, this command returns the first-level directories in the current path as JSON:

tree -d -L 1 -J

The result of the execution might be like this:

    [
      {"type":"directory","name":".","contents":[
        {"type":"directory","name":"lambda1"},
        {"type":"directory","name":"lambda2"}
      ]},
      {"type":"report","directories":2}
    ]

To pass this list to the second job so the matrix strategy can interpret it, we must first transform it into a JSON array of strings, as follows: ["lambda1","lambda2"].

An easy way to do it is using the jq command, like so:

tree -d -L 1 -J | jq -j -c '.[0].contents | map(.name)'

The tree command outputs:

    [
      {"type":"directory","name":".","contents":[
        {"type":"directory","name":"lambda1"},
        {"type":"directory","name":"lambda2"}
      ]},
      {"type":"report","directories":2}
    ]

Which is then piped to jq:

| jq -j -c '.[0].contents | map(.name)'

So that we end up getting this array:

["lambda1", "lambda2"]
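For readers less familiar with jq, the same transformation can be sketched in JavaScript. This assumes the tree output nests the directories under a root entry, which is what the `.[0].contents` filter implies; the sample data and the lambdaNames helper are illustrative, not part of the workflow.

```javascript
// Sample `tree -d -L 1 -J` output for a repository with two Lambda folders.
// Assumption: the first element is the root directory, the last is a report.
const treeOutput = JSON.stringify([
  {
    type: 'directory',
    name: '.',
    contents: [
      { type: 'directory', name: 'lambda1' },
      { type: 'directory', name: 'lambda2' },
    ],
  },
  { type: 'report', directories: 2 },
]);

// Equivalent of the jq filter `.[0].contents | map(.name)`:
// take the first element, read its `contents`, and keep only each `name`.
function lambdaNames(json) {
  const [root] = JSON.parse(json);
  return root.contents.map((entry) => entry.name);
}

const names = lambdaNames(treeOutput);
console.log(JSON.stringify(names)); // ["lambda1","lambda2"]
```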

Now that we have the array of Lambdas created dynamically, let’s talk about how we can pass this information between jobs.

Outputs

We need to pass results from one job to another and the best way to do it is via the outputs keyword, which allows you to define named outputs in a job and then use those outputs as inputs in another job.

For example:

    autodiscover:
      runs-on: ubuntu-latest
      outputs:
        lambdaArray: ${{ steps.getLambdas.outputs.lambdas }}
      steps:
        - uses: actions/checkout@v3
        - id: getLambdas
          run: |
            lambdas=$(tree -d -L 1 -J | jq -j -c '.[0].contents | map(.name)')
            echo ::set-output name=lambdas::${lambdas}

The getLambdas step runs the tree and jq pipeline, then sets its lambdas output to the resulting JSON string by running:

    echo ::set-output name=lambdas::${lambdas}

The outputs keyword in the autodiscover job is then using the output of the getLambdas step, which is the JSON string that contains all the subdirectory names, to set the value of lambdaArray.

Our workflow currently generates a list of directories in our repository. According to our conventions, this list contains the names of the Lambdas that we want to deploy.
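GitHub has deprecated the `::set-output` workflow command in favor of appending `name=value` lines to the file named by the `GITHUB_OUTPUT` environment variable. As one possible alternative to the tree and jq pipeline, the discovery step could be a small Node.js script. The sketch below assumes that every non-hidden top-level folder is a Lambda (so folders such as node_modules would need to be excluded); discoverLambdas and writeOutput are hypothetical helper names.

```javascript
// Sketch of a discovery script that a workflow step could run with `node`.
// Assumptions: every non-hidden top-level folder is a Lambda, and the
// output name `lambdas` matches the one read by the job's `outputs` mapping.
const fs = require('node:fs');

function discoverLambdas(root) {
  return fs
    .readdirSync(root, { withFileTypes: true })
    .filter((entry) => entry.isDirectory() && !entry.name.startsWith('.'))
    .map((entry) => entry.name)
    .sort();
}

function writeOutput(name, value, outputFile = process.env.GITHUB_OUTPUT) {
  const line = `${name}=${value}\n`;
  if (outputFile) {
    // On a runner, GITHUB_OUTPUT points at a file that collects step outputs.
    fs.appendFileSync(outputFile, line);
  } else {
    // Outside GitHub Actions, print the line so the script can be tried locally.
    process.stdout.write(line);
  }
  return line;
}

const lambdas = discoverLambdas(process.cwd());
writeOutput('lambdas', JSON.stringify(lambdas));
```

A step with `id: getLambdas` could then run this script (for example `run: node discover-lambdas.js`, a hypothetical filename), and the job-level outputs mapping would stay unchanged.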

Environments and Reusable Workflows

Although our workflow is set up to monitor only the commits on the main branch, it's quite typical to have to track several other branches, such as develop or staging.

In such cases, one way to handle this could be using a single .yaml for all branches we want to be deployed. We can even use a wildcard to handle a prefix such as feature/**, hotfix/**, or any other one we use.

In our case, we are going to be using reusable workflows. This way we’ll be creating part of the workflow in a separate file, which will then be called by our branch workflows. Then, our final version will look like this:

.github/workflows/deploy.yaml:

    name: Deploy Functions in Production environment
    on:
      push:
        branches: [main]
    jobs:
      call-update-function:
        uses: ./.github/workflows/update-function.yaml
        with:
          environment: main
        secrets: inherit

.github/workflows/deploy-staging.yaml:

    name: Deploy Functions in Staging environment
    on:
      push:
        branches: [develop]
    jobs:
      call-update-function:
        uses: ./.github/workflows/update-function.yaml
        with:
          environment: develop
        secrets: inherit

To pass all the secrets from the parent workflow, we are using secrets: inherit. Since all the relevant files are in the same repository, the called workflow will be able to access the necessary secrets. Otherwise, we would pass them in the same way as we pass the inputs.

.github/workflows/update-function.yaml:

    name: Update Function Code
    on:
      workflow_call:
        inputs:
          environment:
            required: true
            type: string
    jobs:
      get-filenames:
        runs-on: ubuntu-latest
        outputs:
          lambdaArray: ${{ steps.getLambdas.outputs.lambdas }}
        steps:
          - name: Checkout repository
            uses: actions/checkout@v3
          - name: Get functions filenames
            id: getLambdas
            run: |
              lambdas=$(tree -d -L 1 -J . | jq -j -c '.[0].contents | map(.name)')
              echo ::set-output name=lambdas::${lambdas}
      update-code:
        needs: get-filenames
        strategy:
          matrix:
            lambda: ${{ fromJSON(needs.get-filenames.outputs.lambdaArray) }}
        runs-on: ubuntu-latest
        steps:
          - name: Checkout repository
            uses: actions/checkout@v3
          - name: Setup node
            uses: actions/setup-node@v3
            with:
              node-version: 16
          - name: Get AWS credentials
            uses: aws-actions/configure-aws-credentials@v2
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: us-east-2
          - name: Zip Lambda functions
            run: zip -j ${{matrix.lambda}}.zip ./${{matrix.lambda}}/index.js
          - name: Update Lambdas code
            # Function names follow the <lambda>_<environment> convention, e.g. lambda1_main
            run: aws lambda update-function-code --function-name=${{matrix.lambda}}_${{inputs.environment}} --zip-file=fileb://${{matrix.lambda}}.zip

Notice that the first two yaml files are pretty much the same. Having these separated will allow us to customize each workflow much more minutely. If we need to run some jobs before deploying to production that aren’t needed on staging/development, we can just add those here.

Here, the jobs defined in update-function.yaml are called from the other two files with the uses keyword.

Note: For either branch, the functions need to be created beforehand, because this workflow only updates existing Lambda functions. In our case, we need to have lambda1_main, lambda2_main, lambda1_develop and lambda2_develop already created for this to work. Ideally, with further improvements, we wouldn’t need to create these ahead of time. This is only a starting point for fully automated Lambda creation and deletion.
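As a quick way to see which functions must exist before the first run, the naming convention can be sketched as follows. Both helpers are hypothetical and simply assume the `<lambda>_<environment>` pattern described in the note above.

```javascript
// Hypothetical helper: builds the AWS function name the workflow targets,
// assuming the `<lambda>_<environment>` convention described above.
function functionName(lambda, environment) {
  return `${lambda}_${environment}`;
}

// Lists every function that must already exist before the first deployment.
function requiredFunctions(lambdas, environments) {
  return environments.flatMap((environment) =>
    lambdas.map((lambda) => functionName(lambda, environment)),
  );
}

const required = requiredFunctions(['lambda1', 'lambda2'], ['main', 'develop']);
console.log(required.join('\n'));
```

Adding a new branch or a new Lambda folder grows this list, which is a useful checklist until function creation is automated too.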

Conclusion

We’ve shown that GitHub Actions provides a simple and straightforward way to automate the deployment of our serverless Lambda functions. This should be considered a core building block when developing serverless applications past the proof-of-concept stage. Of course, as a tech ecosystem grows in complexity and involves multiple teams, some of the more advanced best practices, such as dynamic environments based on feature branches, become more valuable. We’ll leave that for a future article!